Releases: huggingface/diffusers

v0.16.0 DeepFloyd IF & ControlNet v1.1

DeepFloyd's IF: The open-sourced Imagen

IF

IF is a pixel-based text-to-image generation model and was released in late April 2023 by DeepFloyd.

The model architecture is strongly inspired by Google's closed-sourced Imagen. IF is a novel state-of-the-art open-source text-to-image model with a high degree of photorealism and language understanding.

Installation

pip install torch --upgrade # diffusers' IF is optimized for torch 2.0

pip install diffusers --upgrade

Accept the License

Before you can use IF, you need to accept its usage conditions. To do so:

- Make sure to have a Hugging Face account and be logged in

- Accept the license on the model card of DeepFloyd/IF-I-XL-v1.0

- Log in locally by running the following in a Python shell and entering your Hugging Face Hub access token when prompted:

from huggingface_hub import login

login()

Code example

from diffusers import DiffusionPipeline

from diffusers.utils import pt_to_pil

import torch

# stage 1

stage_1 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-XL-v1.0", variant="fp16", torch_dtype=torch.float16)

stage_1.enable_model_cpu_offload()

# stage 2

stage_2 = DiffusionPipeline.from_pretrained(

"DeepFloyd/IF-II-L-v1.0", text_encoder=None, variant="fp16", torch_dtype=torch.float16

)

stage_2.enable_model_cpu_offload()

# stage 3

safety_modules = {

"feature_extractor": stage_1.feature_extractor,

"safety_checker": stage_1.safety_checker,

"watermarker": stage_1.watermarker,

}

stage_3 = DiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-x4-upscaler", **safety_modules, torch_dtype=torch.float16

)

stage_3.enable_model_cpu_offload()

prompt = 'a photo of a kangaroo wearing an orange hoodie and blue sunglasses standing in front of the eiffel tower holding a sign that says "very deep learning"'

generator = torch.manual_seed(1)

# text embeds

prompt_embeds, negative_embeds = stage_1.encode_prompt(prompt)

# stage 1

image = stage_1(

prompt_embeds=prompt_embeds, negative_prompt_embeds=negative_embeds, generator=generator, output_type="pt"

).images

pt_to_pil(image)[0].save("./if_stage_I.png")

# stage 2

image = stage_2(

image=image,

prompt_embeds=prompt_embeds,

negative_prompt_embeds=negative_embeds,

generator=generator,

output_type="pt",

).images

pt_to_pil(image)[0].save("./if_stage_II.png")

# stage 3

image = stage_3(prompt=prompt, image=image, noise_level=100, generator=generator).images

image[0].save("./if_stage_III.png")

For more details about speed and memory optimizations, please have a look at the blog or docs below.

Useful links

👉 The official codebase

👉 Blog post

👉 Space Demo

👉 In-detail docs

ControlNet v1.1

Lvmin Zhang has released improved ControlNet checkpoints as well as a couple of new ones.

You can find all 🧨 Diffusers checkpoints here

Please have a look directly at the model cards to see how to use the checkpoints:

Improved checkpoints:

| Model Name | Control Image Overview | Control Image Example | Generated Image Example |

|---|---|---|---|

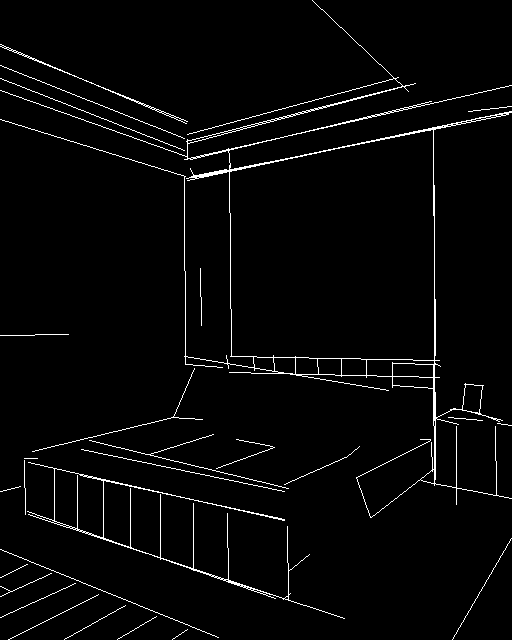

| lllyasviel/control_v11p_sd15_canny Trained with canny edge detection |

A monochrome image with white edges on a black background. |  |

|

| lllyasviel/control_v11p_sd15_mlsd Trained with multi-level line segment detection |

An image with annotated line segments. |  |

|

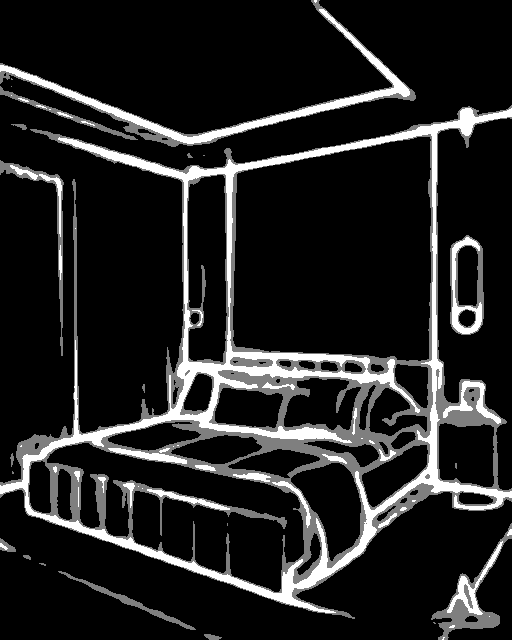

| lllyasviel/control_v11f1p_sd15_depth Trained with depth estimation |

An image with depth information, usually represented as a grayscale image. |  |

|

| lllyasviel/control_v11p_sd15_normalbae Trained with surface normal estimation |

An image with surface normal information, usually represented as a color-coded image. |  |

|

| lllyasviel/control_v11p_sd15_seg Trained with image segmentation |

An image with segmented regions, usually represented as a color-coded image. |  |

|

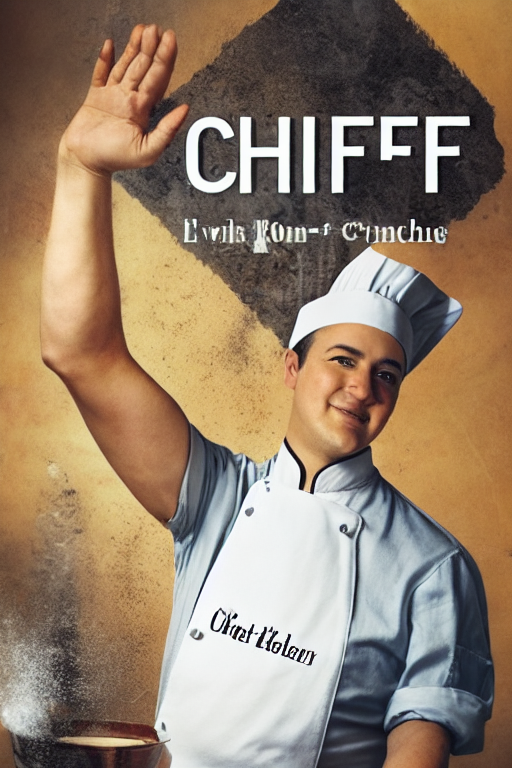

| lllyasviel/control_v11p_sd15_lineart Trained with line art generation |

An image with line art, usually black lines on a white background. |  |

|

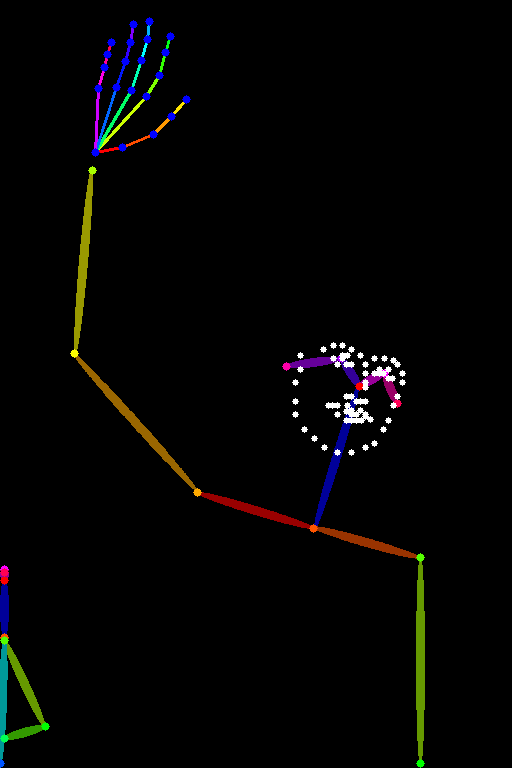

| lllyasviel/control_v11p_sd15_openpose Trained with human pose estimation |

An image with human poses, usually represented as a set of keypoints or skeletons. |  |

|

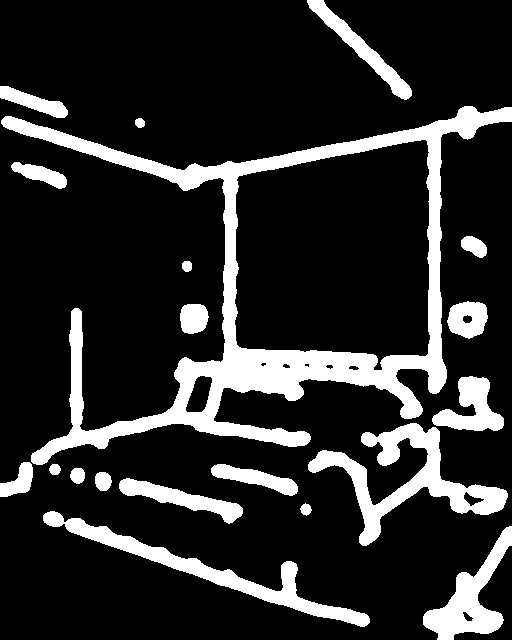

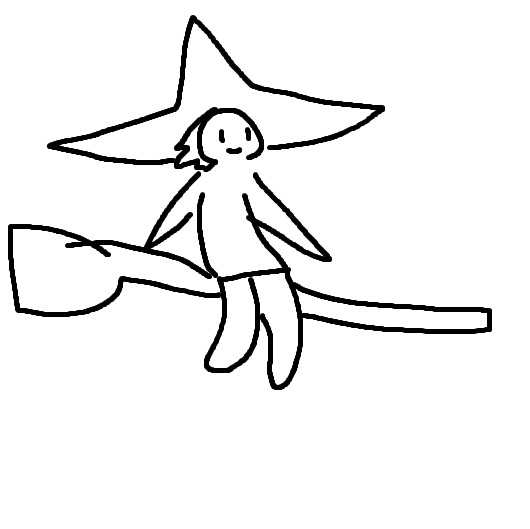

| lllyasviel/control_v11p_sd15_scribble Trained with scribble-based image generation |

An image with scribbles, usually random or user-drawn strokes. |  |

|

| lllyasviel/control_v11p_sd15_softedge Trained with soft edge image generation |

An image with soft edges, usually to create a more painterly or artistic effect. |  |

<img width="64" src="https://huggingface.co/lllyasviel/control_v11p_sd15_sof... |

v0.15.1: Patch Release to fix safety checker, config access and uneven scheduler

Fixes bugs related to missing global pooling in controlnet, img2img processor issue with safety checker, uneven timesteps and better config deprecation

- [Bug fix] Add global pooling to controlnet by @patrickvonplaten in #3121

- [Bug fix] Fix img2img processor with safety checker by @patrickvonplaten in #3127

- [Bug fix] Make sure correct timesteps are chosen for img2img by @patrickvonplaten in #3128

- [Bug fix] Fix config deprecation by @patrickvonplaten in #3129

v0.15.0 Beyond Image Generation

Taking Diffusers Beyond Image Generation

We are very excited about this release! It brings new pipelines for video and audio to diffusers, showing that diffusion is a great choice for all sorts of generative tasks. The modular, pluggable approach of diffusers was crucial to integrate the new models intuitively and cohesively with the rest of the library. We hope you appreciate the consistency of the APIs and implementations, as our ultimate goal is to provide the best toolbox to help you solve the tasks you're interested in. Don't hesitate to get in touch if you use diffusers for other projects!

In addition to that, diffusers 0.15 includes a lot of new features and improvements, from performance and deployment improvements (faster pipeline loading) to increased flexibility for creative tasks (Karras sigmas, weight prompting, support for Automatic1111 textual inversion embeddings) to additional customization options (Multi-ControlNet) to training utilities (ControlNet, Min-SNR weighting). Read on for the details!

🎬 Text-to-Video

Text-guided video generation is not a fantasy anymore - it's as simple as spinning up a colab and running either of the two powerful open-sourced video generation models.

Text-to-Video

Alibaba's DAMO Vision Intelligence Lab has open-sourced a first research-only video generation model that can generate some powerful video clips of up to a minute. To see Spiderman surfing, simply copy-paste the following lines into your favorite Python interpreter:

import torch

from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

from diffusers.utils import export_to_video

pipe = DiffusionPipeline.from_pretrained("damo-vilab/text-to-video-ms-1.7b", torch_dtype=torch.float16, variant="fp16")

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

prompt = "Spiderman is surfing"

video_frames = pipe(prompt, num_inference_steps=25).frames

video_path = export_to_video(video_frames)

For more information you can have a look at "damo-vilab/text-to-video-ms-1.7b"

Text-to-Video Zero

Text2Video-Zero is a zero-shot text-to-video synthesis diffusion model that enables low-cost yet consistent video generation using only pre-trained text-to-image diffusion models, such as Stable Diffusion v1-5. Text2Video-Zero also naturally supports extensions of pre-trained text-to-image models such as Instruct Pix2Pix, ControlNet and DreamBooth, based on which the authors present the Video Instruct Pix2Pix, Pose Conditional, Edge Conditional, and Edge Conditional and DreamBooth Specialized applications.


For more information please have a look at PAIR/Text2Video-Zero

🔉 Audio Generation

Text-guided audio generation has made great progress over the last months with many advances being based on diffusion models.

The 0.15.0 release includes two powerful audio diffusion models.

AudioLDM

Inspired by Stable Diffusion, AudioLDM is a text-to-audio latent diffusion model (LDM) that learns continuous audio representations from CLAP latents. AudioLDM takes a text prompt as input and predicts the corresponding audio. It can generate text-conditional sound effects, human speech and music.

from diffusers import AudioLDMPipeline

import torch

repo_id = "cvssp/audioldm"

pipe = AudioLDMPipeline.from_pretrained(repo_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "Techno music with a strong, upbeat tempo and high melodic riffs"

audio = pipe(prompt, num_inference_steps=10, audio_length_in_s=5.0).audios[0]

The resulting audio output can be saved as a .wav file:

import scipy.io.wavfile

scipy.io.wavfile.write("techno.wav", rate=16000, data=audio)

For more information see cvssp/audioldm
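If SciPy is not installed, a similar file can be written with Python's standard-library `wave` module. The sketch below assumes the audio is a mono sequence of floats in [-1, 1] sampled at 16 kHz, as in the snippet above. The helper name `write_wav_16bit` and the sine tone standing in for the pipeline output are illustrative assumptions, not part of diffusers.

```python
import math
import struct
import wave


def write_wav_16bit(path, samples, rate=16000):
    """Write mono float samples in [-1, 1] as 16-bit PCM (hypothetical helper)."""
    # Clip to the valid range, then scale to signed 16-bit integers.
    pcm = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    with wave.open(path, "wb") as f:
        f.setnchannels(1)   # mono
        f.setsampwidth(2)   # 2 bytes = 16 bits per sample
        f.setframerate(rate)
        f.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))


# Stand-in for `audio`: one second of a 440 Hz tone at 16 kHz.
audio = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
write_wav_16bit("techno_stdlib.wav", audio)
```

With a real pipeline output you would pass the array's values (for example `audio.tolist()`) instead of the synthetic tone.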

Spectrogram Diffusion

This model from the Magenta team is a MIDI to audio generator. The pipeline takes a MIDI file as input and autoregressively generates 5-sec spectrograms which are concatenated together in the end and decoded to audio via a Spectrogram decoder.

from diffusers import SpectrogramDiffusionPipeline, MidiProcessor

pipe = SpectrogramDiffusionPipeline.from_pretrained("google/music-spectrogram-diffusion")

pipe = pipe.to("cuda")

processor = MidiProcessor()

# Download MIDI from: wget http://www.piano-midi.de/midis/beethoven/beethoven_hammerklavier_2.mid

output = pipe(processor("beethoven_hammerklavier_2.mid"))

audio = output.audios[0]

📗 New Docs

Documentation is crucially important for diffusers, as it's one of the first resources where people try to understand how everything works and fix any issues they are observing. We have spent a lot of time in this release reviewing all documents, adding new ones, reorganizing sections and bringing code examples up to date with the latest APIs. This effort has been led by @stevhliu (thanks a lot! 🙌) and @yiyixuxu, but many others have chimed in and contributed.

Check it out: https://huggingface.co/docs/diffusers/index

Don't hesitate to open PRs for fixes to the documentation, they are greatly appreciated as discussed in our (revised, of course) contribution guide.

🪄 Stable UnCLIP

Stable UnCLIP is the best open-sourced image variation model out there. Pass an initial image and optionally a prompt to generate variations of the image:

from diffusers import DiffusionPipeline

from diffusers.utils import load_image

import torch

pipe = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1-unclip-small", torch_dtype=torch.float16)

pipe.to("cuda")

# get image

url = "https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/stable_unclip/tarsila_do_amaral.png"

image = load_image(url)

# run image variation

image = pipe(image).images[0]

For more information you can have a look at "stabilityai/stable-diffusion-2-1-unclip"


🚀 More ControlNet

ControlNet was released in diffusers in version 0.14.0, but we have some exciting developments: Multi-ControlNet, a training script, an upcoming event, and a community image-to-image pipeline contributed by @mikegarts!

Multi-ControlNet

Thanks to community member @takuma104, it's now possible to use several ControlNet conditioning models at once! It works with the same API as before, only supplying a list of ControlNets instead of just one:

import torch

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

controlnet_canny = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny",

torch_dtype=torch.float16).to("cuda")

controlnet_pose = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-openpose",

torch_dtype=torch.float16).to("cuda")

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"example/a-sd15-variant-model", torch_dtype=torch.float16,

controlnet=[controlnet_pose, controlnet_canny]

).to("cuda")

pose_image = ...

canny_image = ...

prompt = ...

image = pipe(prompt=prompt, image=[pose_image, canny_image]).images[0]

And this is an example of how this affects generation:

| Control Image1 | Control Image2 | Generated |

|---|---|---|

|

|

|

|

(none) |  |

|

(none) |  |
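Conceptually, each ControlNet contributes residual features that steer the UNet, and with several ControlNets those contributions have to be combined. The sketch below assumes the residuals are summed after an optional per-model scale. The function name, the flat lists standing in for feature maps, and the scale values are illustrative assumptions rather than the diffusers implementation.

```python
def combine_residuals(residuals, scales=None):
    """Sum per-ControlNet residuals element-wise, each weighted by its scale."""
    if scales is None:
        scales = [1.0] * len(residuals)
    if len(scales) != len(residuals):
        raise ValueError("need exactly one scale per ControlNet")
    combined = [0.0] * len(residuals[0])
    for residual, scale in zip(residuals, scales):
        for i, value in enumerate(residual):
            combined[i] += scale * value
    return combined


# Toy residuals standing in for the pose and canny ControlNet outputs.
pose_residual = [0.5, -1.0, 2.0]
canny_residual = [1.0, 1.0, -2.0]

both = combine_residuals([pose_residual, canny_residual])
pose_heavy = combine_residuals([pose_residual, canny_residual], scales=[1.0, 0.5])
print(both)        # [1.5, 0.0, 0.0]
print(pose_heavy)  # [1.0, -0.5, 1.0]
```

Lowering one scale weakens that control signal relative to the other, which is one way to read the rows above where only one control image is supplied.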

ControlNet Training

We have created a training script for ControlNet, and can't wait to see what new ideas the community may come up with! In fact, we are so pumped about it that we are organizing a JAX Diffusers sprint with a special focus on ControlNet, where participant teams will be assigned TPUs v4-8 to work on their projects 🤯. Those are some mean machines, so make sure you join our discord to follow the event: https://discord.com/channels/879548962464493619/897387888663232554/1092751149217615902.

🐈⬛ Textual Inversion, Revisited

Several great contributors have been working on textual inversion to get the most of it. @isamu-isozaki made it possible to perform multitoken training, and @pies...

ControlNet, 8K VAE decoding

🚀 ControlNet comes to 🧨 Diffusers!

Thanks to an amazing collaboration with community member @takuma104 🙌, diffusers fully supports ControlNet! All 8 control models from the paper are available for you to use: depth, scribbles, edges, and more. Best of all is that you can take advantage of all the other goodies and optimizations that Diffusers provides out of the box, making this an ultra fast implementation of ControlNet. Take it for a spin to see for yourself.

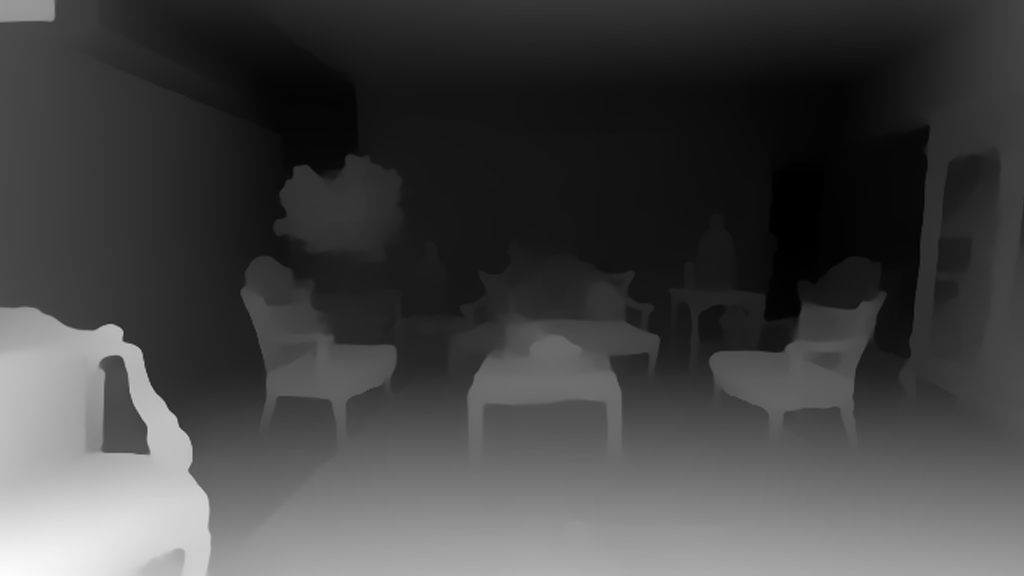

ControlNet works by training a copy of some of the layers of the original Stable Diffusion model on additional signals, such as depth maps or scribbles. After training, you can provide a depth map as a strong hint of the composition you want to achieve, and have Stable Diffusion fill in the details for you. For example:

| Before | After |

|---|---|

|

|

Currently, there are 8 published control models, all of which were trained on runwayml/stable-diffusion-v1-5 (i.e., Stable Diffusion version 1.5). This is an example that uses the scribble controlnet model:

| Before | After |

|---|---|

|

|

Or you can turn a cartoon into a realistic photo with incredible coherence:

How do you use ControlNet in diffusers? Just like this (example for the canny edges control model):

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

import torch

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16

)

As usual, you can use all the features in the diffusers toolbox: super-fast schedulers, memory-efficient attention, model offloading, etc. We think 🧨 Diffusers is the best way to iterate on your ControlNet experiments!

Please, refer to our blog post and documentation for details.

(And, coming soon, ControlNet training – stay tuned!)

💠 VAE tiling for ultra-high resolution generation

Another community member, @kig, conceived, proposed and fully implemented an amazing PR that allows generation of ultra-high resolution images without memory blowing up 🤯. They follow a tiling approach during the image decoding phase of the process, generating a piece of the image at a time and then stitching them all together. Tiles are blended carefully to avoid visible seams between them, and the final result is amazing. This is the additional code you need to use to enjoy high-resolution generations:

pipe.vae.enable_tiling()

That's it!
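To make the tiling idea concrete, here is a minimal one-dimensional sketch of decoding overlapping tiles and cross-fading them. It is an illustration under stated assumptions, not the code from the PR: `decode` is a stand-in for the VAE decoder, and the tile size, overlap and linear blending weights are hypothetical choices.

```python
def decode(tile):
    """Stand-in for a VAE decoder: any function applied to one tile at a time."""
    return [2.0 * x for x in tile]


def tiled_decode(latents, tile=8, overlap=4):
    """Decode overlapping 1-D tiles and cross-fade them where they overlap."""
    if overlap >= tile:
        raise ValueError("overlap must be smaller than the tile size")
    stride = tile - overlap
    out = [0.0] * len(latents)
    weight = [0.0] * len(latents)
    start = 0
    while True:
        end = min(start + tile, len(latents))
        decoded = decode(latents[start:end])  # only one tile is decoded at a time
        for i, value in enumerate(decoded):
            # Linear ramp: low weight near the tile edges, high in the middle.
            w = min(i + 1, len(decoded) - i)
            out[start + i] += w * value
            weight[start + i] += w
        if end == len(latents):
            break
        start += stride
    # Dividing by the accumulated weights blends the overlapping regions.
    return [o / w for o, w in zip(out, weight)]


latents = [float(i) for i in range(20)]
blended = tiled_decode(latents)
full = decode(latents)
print(max(abs(a - b) for a, b in zip(blended, full)))
```

Because this toy decoder acts on each element independently, the blended result matches a single full decode. A real decoder differs slightly from tile to tile, which is why the blending matters.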

For a complete example, refer to the PR or the code snippet we reproduce here for your convenience:

import torch

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", revision="fp16", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

pipe.enable_xformers_memory_efficient_attention()

pipe.vae.enable_tiling()

prompt = "a beautiful landscape photo"

image = pipe(prompt, width=4096, height=2048, num_inference_steps=10).images[0]

image.save("4k_landscape.jpg")

All commits

- [Docs] Add a note on SDEdit by @sayakpaul in #2433

- small bugfix at StableDiffusionDepth2ImgPipeline call to check_inputs and batch size calculation by @mikegarts in #2423

- add demo by @yiyixuxu in #2436

- fix: code snippet of instruct pix2pix from the docs. by @sayakpaul in #2446

- Update train_text_to_image_lora.py by @haofanwang in #2464

- `mps` test fixes by @pcuenca in #2470

- Fix test `train_unconditional` by @pcuenca in #2481

- add MultiDiffusion to controlling generation by @omerbt in #2490

- image_noiser -> image_normalizer comment by @williamberman in #2496

- [Safetensors] Make sure metadata is saved by @patrickvonplaten in #2506

- Add 4090 benchmark (PyTorch 2.0) by @pcuenca in #2503

- [Docs] Improve safetensors by @patrickvonplaten in #2508

- Disable ONNX tests by @patrickvonplaten in #2509

- attend and excite batch test causing timeouts by @williamberman in #2498

- move pipeline based test skips out of pipeline mixin by @williamberman in #2486

- pix2pix tests no write to fs by @williamberman in #2497

- [Docs] Include more information in the "controlling generation" doc by @sayakpaul in #2434

- Use "hub" directory for cache instead of "diffusers" by @pcuenca in #2005

- Sequential cpu offload: require accelerate 0.14.0 by @pcuenca in #2517

- is_safetensors_compatible refactor by @williamberman in #2499

- [Copyright] 2023 by @patrickvonplaten in #2524

- Bring Flax attention naming in sync with PyTorch by @pcuenca in #2511

- [Tests] Fix slow tests by @patrickvonplaten in #2526

- PipelineTesterMixin parameter configuration refactor by @williamberman in #2502

- Add a ControlNet model & pipeline by @takuma104 in #2407

- 8k Stable Diffusion with tiled VAE by @kig in #1441

- Textual inv make save log both steps by @isamu-isozaki in #2178

- Fix convert SD to diffusers error by @fkunn1326 in #1979)

- Small fixes for controlnet by @patrickvonplaten in #2542

- Fix ONNX checkpoint loading by @anton-l in #2544

- [Model offload] Add nice warning by @patrickvonplaten in #2543

Significant community contributions

The following contributors have made significant changes to the library over the last release:

- @takuma104

- Add a ControlNet model & pipeline (#2407)

New Contributors

- @mikegarts made their first contribution in #2423

- @fkunn1326 made their first contribution in #2529

Full Changelog: v0.13.0...v0.14.0

v0.13.1: Patch Release to fix warning when loading from `revision="fp16"`

- fix transformers naming by @patrickvonplaten in #2430

- remove author names. by @sayakpaul in #2428

- Fix deprecation warning by @patrickvonplaten in #2426

- fix the get_indices function by @yiyixuxu in #2418

- Update pipeline_utils.py by @haofanwang in #2415

Controllable Generation: Pix2Pix0, Attend and Excite, SEGA, SAG, ...

🎯 Controlling Generation

There has been much recent work on fine-grained control of diffusion networks!

Diffusers now supports:

- Instruct Pix2Pix

- Pix2Pix 0, more details in docs

- Attend and excite, more details in docs

- Semantic guidance, more details in docs

- Self-attention guidance, more details in docs

- Depth2image

- MultiDiffusion panorama, more details in docs

See our doc on controlling image generation and the individual pipeline docs for more details on the individual methods.

🆙 Latent Upscaler

Latent Upscaler is a diffusion model that is designed explicitly for Stable Diffusion. You can take the generated latent from Stable Diffusion and pass it into the upscaler before decoding with your standard VAE. Or you can take any image, encode it into the latent space, use the upscaler, and decode it. It is incredibly flexible and can work with any SD checkpoints.

| Original output image | 2x upscaled output image |

|---|---|

|

|

The model was developed by Katherine Crowson in collaboration with Stability AI

from diffusers import StableDiffusionLatentUpscalePipeline, StableDiffusionPipeline

import torch

pipeline = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16)

pipeline.to("cuda")

upscaler = StableDiffusionLatentUpscalePipeline.from_pretrained("stabilityai/sd-x2-latent-upscaler", torch_dtype=torch.float16)

upscaler.to("cuda")

prompt = "a photo of an astronaut high resolution, unreal engine, ultra realistic"

generator = torch.manual_seed(33)

# we stay in latent space! Let's make sure that Stable Diffusion returns the image

# in latent space

low_res_latents = pipeline(prompt, generator=generator, output_type="latent").images

upscaled_image = upscaler(

prompt=prompt,

image=low_res_latents,

num_inference_steps=20,

guidance_scale=0,

generator=generator,

).images[0]

# Let's save the upscaled image under "astronaut_1024.png"

upscaled_image.save("astronaut_1024.png")

# as a comparison: Let's also save the low-res image

with torch.no_grad():
    image = pipeline.decode_latents(low_res_latents)

image = pipeline.numpy_to_pil(image)[0]

image.save("astronaut_512.png")

⚡ Optimization

In addition to new features and an increasing number of pipelines, diffusers cares a lot about performance. This release brings a number of optimizations that you can turn on easily.

xFormers

Memory efficient attention, as implemented by xFormers, has been available in diffusers for some time. The problem was that installing xFormers could be complicated because there were no official pip wheels (or they were outdated), and you had to resort to installing from source.

From xFormers 0.0.16, official pip wheels are now published with every release, so installing and using xFormers is now as simple as these two steps:

- `pip install xformers` in your terminal.

- `pipe.enable_xformers_memory_efficient_attention()` in your code to opt in for your pipelines.

These actions will unlock dramatic memory savings, and usually faster inference too!

See more details in the documentation.

Torch 2.0

Speaking of memory-efficient attention, Accelerated PyTorch 2.0 Transformers now comes with built-in native support for it! When PyTorch 2.0 is released you'll no longer have to install xFormers or any third-party package to take advantage of it. In diffusers we are already preparing for that, and it works out of the box. So, if you happen to be using the latest "nightlies" of PyTorch 2.0 beta, then you're all set – diffusers will use Accelerated PyTorch 2.0 Transformers by default.

In our tests, the built-in PyTorch 2.0 implementation is usually as fast as xFormers', and sometimes even faster. Performance depends on the card you are using and whether you run your code in float16 or float32, so check our documentation for details.
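One way to check whether your environment already provides the native implementation is to look for `torch.nn.functional.scaled_dot_product_attention`, the PyTorch 2.0 function behind this feature. The helper name below is hypothetical, and the sketch makes no claim about how diffusers itself performs the check. It simply reports `False` when PyTorch is missing or predates 2.0.

```python
import importlib.util


def has_native_sdpa() -> bool:
    """Return True if PyTorch with built-in scaled dot-product attention is installed."""
    if importlib.util.find_spec("torch") is None:
        return False  # PyTorch is not installed at all
    import torch.nn.functional as F

    # The function was added in PyTorch 2.0; older versions do not have it.
    return hasattr(F, "scaled_dot_product_attention")


if has_native_sdpa():
    print("Native memory-efficient attention is available.")
else:
    print("Consider installing xFormers or upgrading PyTorch.")
```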

Coarse-grained CPU offload

Community member @keturn, with whom we have enjoyed thoughtful software design conversations, called our attention to the fact that enabling sequential cpu offloading via enable_sequential_cpu_offload worked great to save a lot of memory, but made inference much slower.

This is because enable_sequential_cpu_offload() is optimized for memory, and it recursively works across all the submodules contained in a model, moving them to GPU when they are needed and back to CPU when another submodule needs to run. These cpu-to-gpu-to-cpu transfers happen hundreds of times during the stable diffusion denoising loops, because the UNet runs multiple times and it consists of several PyTorch modules.

This release of diffusers introduces a coarser enable_model_cpu_offload() pipeline API, which copies whole models (not modules) to GPU and makes sure they stay there until another model needs to run. The consequences are:

- Less memory savings than `enable_sequential_cpu_offload`, but:

- Almost as fast inference as when the pipeline is used without any type of offloading.
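The difference in transfer counts can be illustrated with a small simulation. This is a sketch under made-up assumptions: the submodule counts, the 25-step call sequence and the `count_transfers` helper are hypothetical and only mimic the two strategies described above.

```python
def count_transfers(call_sequence, submodules, mode):
    """Count CPU-to-GPU moves for one pipeline run under a given offload mode."""
    transfers = 0
    on_gpu = None  # model currently resident on the GPU (model-level mode only)
    for model in call_sequence:
        if mode == "sequential":
            # Every submodule is moved in (and out again) each time the model runs.
            transfers += submodules[model]
        elif mode == "model":
            # The whole model is moved once and stays until another model runs.
            if model != on_gpu:
                transfers += 1
                on_gpu = model
        else:
            raise ValueError(f"unknown mode: {mode}")
    return transfers


steps = 25
submodules = {"text_encoder": 10, "unet": 30, "vae": 10}  # made-up counts
call_sequence = ["text_encoder"] + ["unet"] * steps + ["vae"]

print(count_transfers(call_sequence, submodules, "sequential"))  # 770
print(count_transfers(call_sequence, submodules, "model"))       # 3
```

The repeated UNet calls dominate the sequential count, while the model-level strategy pays for each model only once per run.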

Pix2Pix Zero

Remember the CycleGAN days where one would turn a horse into a zebra in an image while keeping the rest of the content almost untouched? Well, that day has arrived but in the context of Diffusion. Pix2Pix Zero allows users to edit a particular image (be it real or generated), targeting a source concept (horse, for example) and replacing it with a target concept (zebra, for example).

| Input image | Edited image |

|---|---|

|

|

Pix2Pix Zero was proposed in Zero-shot Image-to-Image Translation. The StableDiffusionPix2PixZeroPipeline allows you to

- Edit an image generated from an input prompt

- Provide an input image and edit it

For the latter, it uses the newly introduced DDIMInverseScheduler to first obtain the inverted noise from the input image and use that in the subsequent generation process.

Both of the use cases leverage the idea of "edit directions", used for steering the generation toward the target concept gradually from the source concept. To know more, we recommend checking out the official documentation.
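As a rough illustration of an edit direction, the sketch below takes the difference between the mean embedding of target-concept sentences and the mean embedding of source-concept sentences. The three-dimensional toy vectors and the helper names are assumptions made for this example. Real embeddings come from the text encoder, so please treat this as a sketch rather than the pipeline's exact code.

```python
def mean_vector(vectors):
    """Average a list of equal-length embedding vectors component-wise."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def edit_direction(source_embeds, target_embeds):
    """Direction pointing from the source concept toward the target concept."""
    source_mean = mean_vector(source_embeds)
    target_mean = mean_vector(target_embeds)
    return [t - s for s, t in zip(source_mean, target_mean)]


# Toy embeddings of two "horse" sentences and two "zebra" sentences.
horse = [[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]]
zebra = [[2.0, 4.0, 2.0], [2.0, 2.0, 2.0]]

print(edit_direction(horse, zebra))  # [0.0, 3.0, 0.0]
```

Averaging over several sentences per concept is meant to cancel out sentence-specific details and keep only what distinguishes the two concepts.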

Attend and excite

Attend-and-Excite was proposed in Attend-and-Excite: Attention-Based Semantic Guidance for Text-to-Image Diffusion Models. It guides the generative model to modify the cross-attention values during the image synthesis process, so that the generated images depict the input text prompt more faithfully. Thanks to community contributor @evinpinar for leading the charge to add this pipeline!

- Attend and excite 2 by @evinpinar @yiyixuxu #2369

Semantic guidance

Semantic Guidance for Diffusion Models was proposed in SEGA: Instructing Diffusion using Semantic Dimensions and provides strong semantic control over image generation. Small changes to the text prompt usually result in entirely different output images. However, with SEGA, a variety of changes to the image are enabled that can be controlled easily and intuitively and stay true to the original image composition. Thanks to the lead author of SEGA, Manuel (@manuelbrack), who added the pipeline in #2223.

Here is a simple demo:

import torch

from diffusers import SemanticStableDiffusionPipeline

pipe = SemanticStableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

out = pipe(

prompt="a photo of the face of a woman",

num_images_per_prompt=1,

guidance_scale=7,

editing_prompt=[

"smiling, smile", # Concepts to apply

"glasses, wearing glasses",

"curls, wavy hair, curly hair",

"beard, full beard, mustache",

],

reverse_editing_direction=[False, False, False, False], # Direction of guidance i.e. increase all concepts

edit_warmup_steps=[10, 10, 10, 10], # Warmup period for each concept

...

v0.12.1: Patch Release to fix local files only

Make sure cached models can be loaded in offline mode.

- Don't call the Hub if `local_files_only` is specified by @patrickvonplaten in #2119

Instruct-Pix2Pix, DiT, LoRA

🪄 Instruct-Pix2Pix

Instruct-Pix2Pix is a Stable Diffusion model fine-tuned for editing images from human instructions. Given an input image and a written instruction that tells the model what to do, the model follows these instructions to edit the image.

The model was released with the paper InstructPix2Pix: Learning to Follow Image Editing Instructions. More information about the model can be found in the paper.

pip install diffusers transformers safetensors accelerate

import PIL

import requests

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

url = "https://huggingface.co/datasets/diffusers/diffusers-images-docs/resolve/main/mountain.png"

def download_image(url):
    image = PIL.Image.open(requests.get(url, stream=True).raw)
    image = PIL.ImageOps.exif_transpose(image)
    image = image.convert("RGB")
    return image

image = download_image(url)

prompt = "make the mountains snowy"

edit = pipe(prompt, image=image, num_inference_steps=20, image_guidance_scale=1.5, guidance_scale=7).images[0]

edit.save("snowy_mountains.png")

- Add InstructPix2Pix pipeline by @patil-suraj #2040

🤖 DiT

Diffusion Transformer (DiT) is a class-conditional latent diffusion model that replaces the commonly used U-Net backbone with a transformer operating on latent patches. The pretrained model is trained on the ImageNet-1K dataset and can generate class-conditional images of 256x256 or 512x512 pixels.

The model was released with the paper Scalable Diffusion Models with Transformers.

import torch

from diffusers import DiTPipeline

model_id = "facebook/DiT-XL-2-256"

pipe = DiTPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

# pick words that exist in ImageNet

words = ["white shark", "umbrella"]

class_ids = pipe.get_label_ids(words)

output = pipe(class_labels=class_ids)

image = output.images[0]  # label 'white shark'

⚡ LoRA

LoRA is a technique for performing parameter-efficient fine-tuning for large models. LoRA works by adding so-called "update matrices" to specific blocks of a pre-trained model. During fine-tuning, only these update matrices are updated while the pre-trained model parameters are kept frozen. This allows us to achieve greater memory efficiency as well as easier portability during fine-tuning.
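The "update matrices" can be pictured as a low-rank pair B and A whose product is added to a frozen weight W. The pure-Python sketch below follows that formulation from the LoRA paper. The tiny dimensions, the zero initialisation of B and the helper names are illustrative assumptions and not the diffusers training code.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, scale=1.0):
    """Return W + scale * (B @ A); W itself is never modified (it stays frozen)."""
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


d_out, d_in, rank = 4, 4, 1
w = [[1.0 if i == j else 0.0 for j in range(d_in)] for i in range(d_out)]  # frozen
b = [[0.0] for _ in range(d_out)]  # d_out x rank, zero so the update starts as a no-op
a = [[0.5] * d_in]                 # rank x d_in

unchanged = lora_effective_weight(w, b, a)  # equals w before any training

b[0][0] = 2.0  # pretend one fine-tuning step changed B
w_eff = lora_effective_weight(w, b, a)
print(w_eff[0])                             # [2.0, 1.0, 1.0, 1.0]
print(d_out * d_in, rank * (d_out + d_in))  # 16 8
```

Only the entries of B and A are trained (8 values here instead of 16), and the saving grows quickly with layer size, which is where the small checkpoints come from.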

LoRA was proposed in LoRA: Low-Rank Adaptation of Large Language Models. In the original paper, the authors investigated LoRA for fine-tuning large language models like GPT-3. cloneofsimo was the first to try out LoRA training for Stable Diffusion in the popular lora GitHub repository.

Diffusers now supports LoRA! This means you can now fine-tune a model like Stable Diffusion using consumer GPUs like Tesla T4 or RTX 2080 Ti. LoRA support was added to UNet2DConditionModel and DreamBooth training script by @patrickvonplaten in #1884.

By using LoRA, the fine-tuned checkpoints will be just 3 MBs in size. After fine-tuning, you can use the LoRA checkpoints like so:

from diffusers import StableDiffusionPipeline

import torch

model_path = "sayakpaul/sd-model-finetuned-lora-t4"

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16)

pipe.unet.load_attn_procs(model_path)

pipe.to("cuda")

prompt = "A pokemon with blue eyes."

image = pipe(prompt, num_inference_steps=30, guidance_scale=7.5).images[0]

image.save("pokemon.png")

You can follow these resources to know more about how to use LoRA in diffusers:

- text2image fine-tuning script (by @sayakpaul in #2031).

- Official documentation discussing how LoRA is supported (by @sayakpaul in #2086).

📐 Customizable Cross Attention

LoRA leverages a new method to customize the cross attention layers deep in the UNet. This can be useful for other creative approaches such as Prompt-to-Prompt, and it makes it easier to apply optimizers like xFormers. This new "attention processor" abstraction was created by @patrickvonplaten in #1639 after discussing the design with the community, and we have used it to rewrite our xFormers and attention slicing implementations!

🌿 Flax => PyTorch

This was a long-requested feature: prolific community member @camenduru took up the gauntlet in #1900 and created a way to convert Flax model weights to PyTorch. This means that you can train or fine-tune models super fast using Google TPUs, and then convert the weights to PyTorch for everybody to use. Thanks @camenduru!

🌀 Flax Img2Img

Another community member, @dhruvrnaik, ported the image-to-image pipeline to Flax in #1355! Using a TPU v2-8 (available in Colab's free tier), you can generate 8 images at once in a few seconds!

🎲 DEIS Scheduler

DEIS (Diffusion Exponential Integrator Sampler) is a new fast multistep scheduler that can generate high-quality samples in fewer steps.

The scheduler was introduced in the paper Fast Sampling of Diffusion Models with Exponential Integrator. More information about the scheduler can be found in the paper.

from diffusers import StableDiffusionPipeline, DEISMultistepScheduler

import torch

pipe = StableDiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)

pipe.scheduler = DEISMultistepScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

generator = torch.Generator(device="cuda").manual_seed(0)

image = pipe(prompt, generator=generator, num_inference_steps=25).images[0]

Reproducibility

One can now pass CPU generators to all pipelines even if the pipeline is on GPU. This ensures much better reproducibility across GPU hardware:

import torch

from diffusers import DDIMPipeline

import numpy as np

model_id = "google/ddpm-cifar10-32"

# load model and scheduler

ddim = DDIMPipeline.from_pretrained(model_id)

ddim.to("cuda")

# create a generator for reproducibility

generator = torch.manual_seed(0)

# run pipeline for just two steps and return numpy tensor

image = ddim(num_inference_steps=2, output_type="np", generator=generator).images

print(np.abs(image).sum())

See: #1902 and https://huggingface.co/docs/diffusers/using-diffusers/reproducibility

Important New Guides

- Stable Diffusion 101: https://huggingface.co/docs/diffusers/stable_diffusion

- Reproducibility: https://huggingface.co/docs/diffusers/using-diffusers/reproducibility

- LoRA: https://huggingface.co/docs/diffusers/training/lora

Important Bug Fixes

- Don't download safetensors if library is not installed: #2057

- Make sure that save_pretrained(...) doesn't accidentally delete files: #2038

- Fix CPU offload docs for maximum memory gain: #1968

- Fix conversion for exotically sorted weight names: #1959

- Fix intermediate checkpointing for textual inversion, thanks @lstein #2072

All commits

- update composable diffusion for an updated diffuser library by @nanlliu in #1697

- [Tests] Fix UnCLIP cpu offload tests by @anton-l in #1769

- Bump to 0.12.0.dev0 by @anton-l in #1771

- [Dreambooth] flax fixes by @pcuenca in #1765

- update train_unconditional_ort.py by @prathikr in #1775

- Only test for xformers when enabling them #1773 by @kig in #1776

- expose polynomial:power and cosine_with_restarts:num_cycles params by @zetyquickly in #1737

- [Flax] Stateless schedulers, fixes and refactors by @skirsten in #1661

- Correct hf hub download by @patrickvonplaten in #1767

- Dreambooth docs: minor fixes by @pcuenca in #1758

- Fix num images per prompt unclip by @patil-suraj in #1787

- Add Flax stable diffusion img2img pipeline by @dhruvrnaik in #1355

- Refactor cross attention and allow mechanism to tweak cross attention function by @patrickvonplaten in #1639

- Fix OOM when using PyTorch with JAX installed. by @pcuenca in #1795

- reorder model wrap + bug fix by @prathikr in #1799

- Remove hardcoded names from PT scripts by @patrickvonplaten in #1778

- [textual_inversion] unwrap_model text encoder before accessing weights by @patil-suraj in #1816

- fix small mistake in annotation: 32 -> 64 by @Line290 in #1780

- Make safety_checker optional in more pipelines by @pcuenca in #1796

- Device to use (e.g. cpu, cuda:0, cuda:1, etc.) by @camenduru in #1844

- Avoid duplicating PyTorch + safetensors downloads. by @pcuenca in #1836

- Width was typod as weight by @Helw150 in #1800

- fix: resize transform now preserves aspect ratio by @parlance-zz in #1804

- Make xformers optional even if it is available by @kn in #1753

- Allow selecting precision to make Dreambooth class images by @kabachuha in #1832

- unCLIP image variation by @williamberman in #1781

- [Community Pipeline] MagicMix ...

v0.11.1: Patch release

This patch release fixes a bug with num_images_per_prompt in the UnCLIPPipeline.

- Fix num images per prompt unclip by @patil-suraj in #1787

v0.11.0: Karlo UnCLIP, safetensors, pipeline versions

🪄 Karlo UnCLIP by Kakao Brain

Karlo is a text-conditional image generation model based on OpenAI's unCLIP architecture. It improves on the standard super-resolution model that upscales from 64px to 256px, recovering high-frequency details in a small number of denoising steps.

This alpha version of Karlo is trained on 115M image-text pairs, including COYO-100M high-quality subset, CC3M, and CC12M.

For more information about the architecture, see the Karlo repository: https://github.com/kakaobrain/karlo

pip install diffusers transformers safetensors accelerate

import torch

from diffusers import UnCLIPPipeline

pipe = UnCLIPPipeline.from_pretrained("kakaobrain/karlo-v1-alpha", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a high-resolution photograph of a big red frog on a green leaf."

image = pipe(prompt).images[0]

Community pipeline versioning

The community pipelines hosted in diffusers/examples/community will now follow the installed version of the library.

E.g. if you have diffusers==0.9.0 installed, the pipelines from the v0.9.0 branch will be used: https://github.com/huggingface/diffusers/tree/v0.9.0/examples/community

If you've installed diffusers from source, e.g. with pip install git+https://github.com/huggingface/diffusers then the latest versions of the pipelines will be fetched from the main branch.
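The default rule described above can be sketched as a small helper. This is a simplified illustration and not the library's actual lookup code: the function names are hypothetical, and it assumes that a source install can be recognized by a "dev" marker in its version string (for example 0.12.0.dev0).

```python
def default_community_revision(installed_version: str) -> str:
    """Return the git revision whose community pipelines would be used.

    Assumption: development (source) installs carry "dev" in their version.
    """
    if "dev" in installed_version:
        return "main"
    return f"v{installed_version}"


def community_pipelines_url(installed_version: str) -> str:
    """Build the GitHub URL of the matching examples/community folder."""
    revision = default_community_revision(installed_version)
    return f"https://github.com/huggingface/diffusers/tree/{revision}/examples/community"


release_url = community_pipelines_url("0.9.0")
source_url = community_pipelines_url("0.12.0.dev0")
print(release_url)
print(source_url)
```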

To change the custom pipeline version, set the custom_revision variable like so:

pipeline = DiffusionPipeline.from_pretrained(

"google/ddpm-cifar10-32", custom_pipeline="one_step_unet", custom_revision="0.10.2"

)

🦺 safetensors

Many of the most important checkpoints now have safetensors weights available (https://github.com/huggingface/safetensors). After installing safetensors with:

pip install safetensors

you will see a nice speed-up when loading your model 🚀

Some of the most important checkpoints now have safetensors weights:

- https://huggingface.co/stabilityai/stable-diffusion-2

- https://huggingface.co/stabilityai/stable-diffusion-2-1

- https://huggingface.co/stabilityai/stable-diffusion-2-depth

- https://huggingface.co/stabilityai/stable-diffusion-2-inpainting
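Since safetensors weights are only useful when the safetensors package is installed, a loader has to choose between the two formats. The sketch below shows one way such a choice could be made. It is an assumption-laden illustration rather than the logic diffusers uses: the helper names are hypothetical and the file names in the usage lines are only examples.

```python
import importlib.util
from typing import List


def safetensors_installed() -> bool:
    """Check whether the safetensors package can be imported."""
    return importlib.util.find_spec("safetensors") is not None


def pick_weights_file(available_files: List[str], use_safetensors: bool) -> str:
    """Prefer a .safetensors file when allowed and present, else fall back to .bin."""
    if use_safetensors:
        for name in available_files:
            if name.endswith(".safetensors"):
                return name
    for name in available_files:
        if name.endswith(".bin"):
            return name
    raise FileNotFoundError("no supported weights file found")


# Example file names (assumed for illustration).
files = ["diffusion_pytorch_model.bin", "diffusion_pytorch_model.safetensors"]
chosen = pick_weights_file(files, use_safetensors=safetensors_installed())
print(chosen)
```

Falling back to the .bin file keeps loading functional on machines where safetensors is not installed.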

Batched generation bug fixes 🐛

- Make sure all pipelines can run with batched input by @patrickvonplaten in #1669

We fixed a lot of bugs for batched generation. All pipelines should now correctly process batches of prompts and images 🤗

We also made it much easier to tweak images with reproducible seeds:

https://huggingface.co/docs/diffusers/using-diffusers/reusing_seeds

📝 Changelog

- Remove spurious arg in training scripts by @pcuenca in #1644

- dreambooth: fix #1566: maintain fp32 wrapper when saving a checkpoint to avoid crash when running fp16 by @timh in #1618

- Allow k pipeline to generate > 1 images by @pcuenca in #1645

- Remove unnecessary offset in img2img by @patrickvonplaten in #1653

- Remove unnecessary kwargs in depth2img by @maruel in #1648

- Add text encoder conversion by @lawfordp2017 in #1559

- VersatileDiffusion: fix input processing by @LukasStruppek in #1568

- tensor format ort bug fix by @prathikr in #1557

- Deprecate init image correctly by @patrickvonplaten in #1649

- fix bug if we don't do_classifier_free_guidance by @MKFMIKU in #1601

- Handle missing global_step key in scripts/convert_original_stable_diffusion_to_diffusers.py by @Cyberes in #1612

- [SD] Make sure scheduler is correct when converting by @patrickvonplaten in #1667

- [Textual Inversion] Do not update other embeddings by @patrickvonplaten in #1665

- Added Community pipeline for comparing Stable Diffusion v1.1-4 checkpoints by @suvadityamuk in #1584

- Fix wrong type checking in convert_diffusers_to_original_stable_diffusion.py by @apolinario in #1681

- [Version] Bump to 0.11.0.dev0 by @patrickvonplaten in #1682

- Dreambooth: save / restore training state by @pcuenca in #1668

- Disable telemetry when DISABLE_TELEMETRY is set by @w4ffl35 in #1686

- Change one-step dummy pipeline for testing by @patrickvonplaten in #1690

- [Community pipeline] Add github mechanism by @patrickvonplaten in #1680

- Dreambooth: use warnings instead of logger in parse_args() by @pcuenca in #1688

- manually update train_unconditional_ort by @prathikr in #1694

- Remove all local telemetry by @anton-l in #1702

- Update main docs by @patrickvonplaten in #1706

- [Readme] Clarify package owners by @anton-l in #1707

- Fix the bug that torch version less than 1.12 throws TypeError by @chinoll in #1671

- RePaint fast tests and API conforming by @anton-l in #1701

- Add state checkpointing to other training scripts by @pcuenca in #1687

- Improve pipeline_stable_diffusion_inpaint_legacy.py by @cyber-meow in #1585

- apply amp bf16 on textual inversion by @jiqing-feng in #1465

- Add examples with Intel optimizations by @hshen14 in #1579

- Added a README page for docs and a "schedulers" page by @yiyixuxu in #1710

- Accept latents as optional input in Latent Diffusion pipeline by @daspartho in #1723

- Fix ONNX img2img preprocessing and add fast tests coverage by @anton-l in #1727

- Fix ldm tests on master by not running the CPU tests on GPU by @patrickvonplaten in #1729

- Docs: recommend xformers by @pcuenca in #1724

- Nightly integration tests by @anton-l in #1664

- [Batched Generators] This PR adds generators that are useful to make batched generation fully reproducible by @patrickvonplaten in #1718

- Fix ONNX img2img preprocessing by @peterto in #1736

- Fix MPS fast test warnings by @anton-l in #1744

- Fix/update the LDM pipeline and tests by @anton-l in #1743

- kakaobrain unCLIP by @williamberman in #1428

- [fix] pipeline_unclip generator by @williamberman in #1751

- unCLIP docs by @williamberman in #1754

- Correct help text for scheduler_type flag in scripts. by @msiedlarek in #1749

- Add resnet_time_scale_shift to VD layers by @anton-l in #1757

- Add attention mask to uclip by @patrickvonplaten in #1756

- Support attn2==None for xformers by @anton-l in #1759

- [UnCLIPPipeline] fix num_images_per_prompt by @patil-suraj in #1762

- Add CPU offloading to UnCLIP by @anton-l in #1761

- [Versatile] fix attention mask by @patrickvonplaten in #1763

- [Revision] Don't recommend using revision by @patrickvonplaten in #1764

- [Examples] Update train_unconditional.py to include logging argument for Wandb by @ash0ts in #1719

- Transformers version req for UnCLIP by @anton-l in #1766